cross-compilable and bootstrappable Debian

Sun, 03 Jun 2012 14:21 categories: debianWhen packaging software for Debian, there exist two important assumptions:

- Compilation is done natively

- Potentially all of Debian is available at compile time

Both assumptions make the life of a package maintainer much easier and they do not create any problem unless you are one of the unlucky few who want to run Debian on an architecture that it does not yet exist for.

You will then have to use either cross compile a set of base packages (which is hard because packages are built and tested to built natively, not cross - perl is a big blocker of building the minimal set of packages cross but through multiarch other packages become easier to cross build) or use other distributions like OpenEmbedded or Gentoo which you compiled (or retrieved otherwise) for that new architecture to hack a core of Debian source packages until they build a minimal Debian system that you can chroot into and continue natively building the rest of it. But even if you manage to get that far you will continue to be plagued by cyclic build and runtime dependencies. So you start to hack source packages so that they drop some dependencies and you can break enough cycles to advance step by step.

The Debian ports page lists 24 ports of Debian, so despite its unpleasant nature, porting it is something that is not done seldom.

The process as laid out above has a number of drawbacks:

- The process is mostly manual and reinvented every time it is done.

- If you can't cross compile something, then you need another distribution for the bootstrapping process. Debian itself should be sufficient.

- Its complexity and manual nature prevents architectures with little workforce behind them from catching up to the main archive.

- It also avoids that Debian exists in CPU optimized sub-arch builds.

If Debian would provide a set of core packages that are cross-compilable and which suffice for a minimal foreign build system, and if it would also have enough source packages that provide a reduced build dependency set so that all dependency cycles can be broken, building Debian for a yet unknown architecture could be mostly automated.

The benefits would be:

- Putting Debian on a foreign architecture would (in the best case) boil down to making the code cross-compile for and native-compile on that architecture.

- Debian would not need any other distribution to be ported to a different architecture. This would make Debian even more "universal".

- Lagging architectures can be more easily updated or rebooted than when they were initially created.

- Debian optimized for specific CPUs (Raspberry Pie, OpenMoko...) would be more attractive.

With three of this year's GSoC projects, this dream seems to come into reach.

There is the "Multiarch Cross-Toolchains" project by Thibaut Girka and mentored by Hector Oron and Marcin Juszkiewicz. Cross-compiling toolchains need packages from the foreign architecture to be installed alongside the native libraries. Cross-compiler packages have been available through the emdebian repositories but always were more of a hack. With multiarch, it is now possible to install packages from multiple architectures at once, so that cross-compilation toolchains can be realized in a proper manner and therefor can also enter the main archives. Besides creating multiarch enabled toolchains, he will also be responsible for making them build on the Debian builld system as cross-architecture dependencies are not yet supported.

There is also the "Bootstrappable Debian" project by Patrick "P. J." McDermott and mentored by Wookey and Jonathan Austin. He will make a small set of source packages multiarch cross-compilable (using cross-compilers provided by Thibaut Girka) and add a Build-Depends-StageN header to critical packages so that they can be built with reduced build dependencies for breaking dependency cycles. He will also patch tools as necessary to recognize the new control header.

And then there is my project: "Port bootstrap build-ordering tool" (Application). It is mentored by Wookey and Pietro Abate. In contrast to the other two, my output will be more on the meta-level as I will not modify any actual Debian package or patch Debian tools with more functionality. Instead the goal of this project is threefold:

- find the minimal set of source packages that have to be cross compiled

- help the user to find packages that are good candidates for breaking build dependency cycles through added staged build dependencies or by making them cross-compilable

- develop a tool that takes the information about packages that can be cross compiled or have staged build dependencies to output an ordering with which packages must be built to go from nothing to a full archive

More on that project in my follow-up post.

setting up mailman, postfix, lighttpd

Fri, 25 May 2012 07:42 categories: debianI was worried about having to learn hundreds of configuration options to properly set up mailman, postfix and lighttpd on Debian Squeeze. Turned out, that except for lighttpd it all works out of the box.

apt-get install postfix

When asked by debconf, I specified lists.mister-muffin.de as the fully qualified domain name.

apt-get install mailman

newlist mailman

The newlist command reminds me that I have to add its output to

/etc/aliases. After doing so, I have to run:

newaliases

From now on, I can add any mailinglist by running newlist, editing

/etc/aliases and running newaliases.

Mailinglists can also be added through the mailman webinterface but one still

has to put the according entries into /etc/aliases.

Following is a working lighttpd configuration that works out of the box with the default settings of mailman on Debian squeeze.

This was the only part that caused me some headaches.

server.modules += ("mod_alias", "mod_cgi", "mod_accesslog")

$HTTP["host"] == "lists.mister-muffin.de" { accesslog.filename =

accesslog.filename = "/var/log/lighttpd/lists-access-log"

alias.url += (

"/cgi-bin/mailman/private/" => "/var/lib/mailman/archives/private/",

"/cgi-bin/mailman/public/" => "/var/lib/mailman/archives/public/",

"/pipermail/" => "/var/lib/mailman/archives/public/",

"/cgi-bin/mailman/"=> "/var/lib/mailman/cgi-bin/",

"/images/mailman/" => "/usr/share/images/mailman/",

)

cgi.assign = (

"/admin" => "",

"/admindb" => "",

"/confirm" => "",

"/create" => "",

"/edithtml" => "",

"/listinfo" => "",

"/options" => "",

"/private" => "",

"/rmlist" => "",

"/roster" => "",

"/subscribe" => "")

}

server.document-root = "/var/www"

server.errorlog = "/var/log/lighttpd/error.log"

server.pid-file = "/var/run/lighttpd.pid"

server.username = "www-data"

server.groupname = "www-data"

index-file.names = ( "index.html" )

server.dir-listing = "disable"

include_shell "/usr/share/lighttpd/create-mime.assign.pl"

As a bonus, I wanted to import my existing email exchange with my GSoC mentors into the mailinglist. First I was planning on manually sending the email messages to the list, but a much easier option is to just import them in mbox format.

To extract all email messages, I first wrote the following python snippet:

import mailbox, itertools

box = mailbox.mbox('~/out')

for message in itertools.chain(mailbox.mbox('~/sent'), mailbox.Maildir('~/Mail/Web/', factory=None)):

if (("wookey" in message.get('to', "").lower()

or "wookey" in message.get('cc', "").lower()

or "wookey" in message.get('from', "").lower()

or "abate" in message.get('to', "").lower()

or "abate" in message.get('cc', "").lower()

or "abate" in message.get('from', "").lower())

and not message['subject'][0] == '['

and not message['subject'] == "multistrap"):

box.add(message)

box.close()

It iterates through messages in my mbox and maildir mailboxes, filters them for

emails by wookey or pietro, strips away some messages I found to not be

relevant and then saves the filtered result into the mbox mailbox ~/out.

It is important to specify factory=None for the Maildir parser, because it

otherwise defaults to rfc822.Message instead of MaildirMessage.

Also do not forget to call box.close(). I initially forgot to do so and ended

up with missing messages in ~/out.

I then copy the archive in its place:

scp out lists.mister-muffin.de:/var/lib/mailman/archives/private/debian-bootstrap.mbox/debian-bootstrap.mbox

Another thing that initially caused me trouble, was that the mbox didnt have the correct permissions due to the scp. Fixing them:

chown -R list:www-data /var/lib/mailman/archives/private/

chmod 664 /var/lib/mailman/archives/private/debian-bootstrap.mbox/debian-bootstrap.mbox

And update the mailman archive like this:

sudo -u list /usr/lib/mailman/bin/arch debian-bootstrap /var/lib/mailman/archives/private/debian-bootstrap.mbox/debian-bootstrap.mbox

Initially I was running the above command as root which screws up permissions as well.

sisyphus wins ICRA 2012 VMAC

Mon, 21 May 2012 09:48 categories: blogSisyphus is a piece of software that I wrote as a member of a team from Jacobs University led by Prof. Dr. Andreas Nüchter. It managed to place our team first in this year's IEEE ICRA 2012 Virtual Manufacturing Automation Competition in all three rounds.

The goal was, to stack a given set of boxes of different length, height and width on a pallet in a way that achieved optimal volume utilization, center of mass and interlock of the boxes. Besides the cartesian placement of a box on the pallet, the only other degree of freedom was a 90° rotation of the box around a vertical axis.

Since the arrangement of boxes into a three dimensional container is NP hard (three dimensional orthogonal knapsack), I decided for a heuristic for an approximate solution.

The premise is, that there are many boxes of equal height which was the case in the test cases that were available from the 2011 VMAC.

Given this premise, my heuristic was, to arrange the boxes into layers of equal height and then stack these layers on top of each other. A set of boxes that would be left over or too little from the start to form its own full layer, would then be stacked on the top of the completed layers.

There is a video of how this looked like.

My code is now online on github and it even documented for everybody who is not me (or a potential future me of course).

This blog post is about the "interesting" parts of sisyphus. You can read about the overall workings of it in the project's README.

Python dict to XML and XML to Python dict

The evaluation program for the challenge is reading XML files and the pallet size and the list of articles with their sizes are also given in XML format. So I had to have a way to easily read article information from XML and to easily dump my data into XML format.

Luckily, all the XML involved was not making use of XML attributes at all, so the only children a node had, where other nodes. Thus, the whole XML file could be represented as an XML dictionary with keys being tagnames and the values being other dictionaries or lists or strings or integers.

The code doing that uses xml.etree.ElementTree and turns out to be very

simple:

from xml.etree import ElementTree

def xmltodict(element):

def xmltodict_handler(parent_element):

result = dict()

for element in parent_element:

if len(element):

obj = xmltodict_handler(element)

else:

obj = element.text

if result.get(element.tag):

if hasattr(result[element.tag], "append"):

result[element.tag].append(obj)

else:

result[element.tag] = [result[element.tag], obj]

else:

result[element.tag] = obj

return result

return {element.tag: xmltodict_handler(element)}

def dicttoxml(element):

def dicttoxml_handler(result, key, value):

if isinstance(value, list):

for e in value:

dicttoxml_handler(result, key, e)

elif isinstance(value, basestring):

elem = ElementTree.Element(key)

elem.text = value

result.append(elem)

elif isinstance(value, int) or isinstance(value, float):

elem = ElementTree.Element(key)

elem.text = str(value)

result.append(elem)

elif value is None:

result.append(ElementTree.Element(key))

else:

res = ElementTree.Element(key)

for k, v in value.items():

dicttoxml_handler(res, k, v)

result.append(res)

result = ElementTree.Element(element.keys()[0])

for key, value in element[element.keys()[0]].items():

dicttoxml_handler(result, key, value)

return result

def xmlfiletodict(filename):

return xmltodict(ElementTree.parse(filename).getroot())

def dicttoxmlfile(element, filename):

ElementTree.ElementTree(dicttoxml(element)).write(filename)

def xmlstringtodict(xmlstring):

return xmltodict(ElementTree.fromstring(xmlstring))

def dicttoxmlstring(element):

return ElementTree.tostring(dicttoxml(element))

Lets try this out:

>>> from util import xmlstringtodict, dicttoxmlstring

>>> xmlstring = "<foo><bar>foobar</bar><baz><a>1</a><a>2</a></baz></foo>"

>>> xmldict = xmlstringtodict(xmlstring)

>>> print xmldict

{'foo': {'baz': {'a': ['1', '2']}, 'bar': 'foobar'}}

>>> dicttoxmlstring(xmldict)

'<foo><baz><a>1</a><a>2</a></baz><bar>foobar</bar></foo>'

The dict container doesnt preserve order, but as XML doesnt require that, this is also not an issue.

Arranging items in layers

When it was decided, that I wanted to take the layered approach, it boiled down the 3D knapsack problem to a 2D knapsack problem. The problem statement now was: how to best fit small rectangles into a big rectangle?

I decided for a simple and fast approach as it is explained in Jake Gordon's

blog article. There is

a demo of his

code and should the site vanish from the net, the code is on

github. This solution seemed to

generate results that were "good enough" while simple to implement and fast to

execute. If you look very hard, you can still see some design similarities

between my arrange_spread.py and his packer.js code.

Jake Gordon got his idea from Jim Scott who wrote an article of arranging randomly sized lightmaps into a bigger texture.

There is also an ActiveState Code recipe from 2005 which looks very similar to the code by Jake Gordon.

The posts of Jake Gordon and Jim Scott explain the solution well, so that I dont have to repeat it. Should the above resources go offline, I made backups of them here and here. There is also a backup of the ActiveState piece here.

Spreading items out

The algorithm above would cram all rectangles into a top-left position. As a result, there would mostly be space at the bottom and left edge of the available pallet surface. This is bad for two reasons:

- the mass is distributed unequally

- articles on the layer above at the bottom or left edge, are prone to overhang too much so that they tumble down

Instead all articles should be spread over the available pallet area, creating small gaps between them instead big spaces at the pallet borders.

Since articles were of different size, it was not clear to me from the start what "equal distribution" would even mean because it was obvious that it was not as simple as making the space between all rectangles equal. The spacing had to be different between them to accommodate for differently sized boxes.

The solution I came up with, made use of the tree structure, that was built by the algorithm that arranged the rectangles in the first place. The idea is, to spread articles vertically first, recursively starting with the deepest nodes and spreading them out in their parent rectangle. And then spreading them horizontally, spreading the upper nodes first, recursively resizing and spreading child nodes.

The whole recursion idea created problems of its own. One of the nicest recursive beauty is the following function:

def get_max_horiz_nodes(node):

if node is None or not node['article']:

return [], []

elif node['down'] and node['down']['article']:

rightbranch, sr = get_max_horiz_nodes(node['right'])

rightbranch = [node] + rightbranch

downbranch, sd = get_max_horiz_nodes(node['down'])

ar = rightbranch[len(rightbranch)-1]['article']

ad = downbranch[len(downbranch)-1]['article']

if ar['x']+ar['width'] > ad['x']+ad['width']:

return rightbranch, sr+[downbranch[0]]

else:

return downbranch, sd+[rightbranch[0]]

else:

rightbranch, short = get_max_horiz_nodes(node['right'])

return [node] + rightbranch, short

get_max_horiz_nodes() traverses all branches of the tree that node has

below itself and returns a tuple containing the list of nodes that form the

branch that stretches out widest plus the list of nodes that are in the other

branches (which are shorter than the widest).

Another interesting problem was, how to decide on the gap between articles. This was interesting because the number resulting of the subtraction of the available length (or width) and the sum of the articles lengths (or widths), was mostly not divisible by the amount of gaps without leaving a rest. So there had to be an algorithm that gives me a list of integers, neither of them differing by more than one to any other, that when summed up, would give me the total amount of empty space. Or in other words: how to divide a number m into n integer pieces such that each of those integers doesnt differ more than 1 from any other.

Surprisingly, generating this list doesnt contain any complex loop constructs:

>>> m = 108 # total amount

>>> n = 7 # number of pieces

>>> d,e = divmod(m, n)

>>> pieces = (e)*[(d+1)]+(n-e)*[d]

>>> print pieces

[16, 16, 16, 15, 15, 15, 15]

>>> sum(pieces) == m

True

>>> len(pieces) == n

True

You can test out the algorithms that arrange rectangles and spread them out by cloning the git and then running:

PYTHONPATH=. python legacy/arrange_spread.py

The results will be svg files test1.svg and test2.svg, the latter showing the spread-out result.

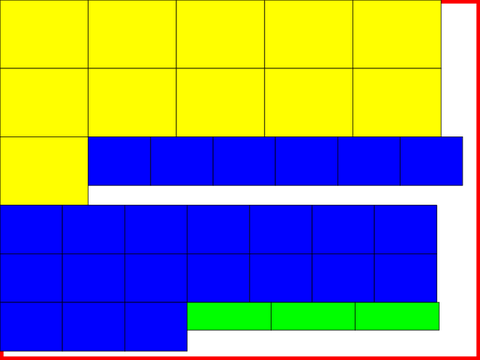

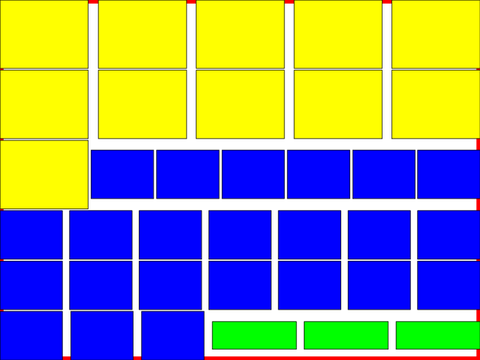

Here is an example how the output looks like (without the red border which is drawn to mark the pallet border):

arrange_spread2.py contains an adaption of arrange_spread.py for the actual

problem.

Permutations without known length

When creating a layer out of articles of same height, then there are four strategies that I can choose from. It is four because there are two methods that I can either use or not. I can rotate the article by 90° per default or not and I can rotate the pallet or not.

So every time that I build a new layer, there are those four options. Depending on which strategy I choose, there is a different amount of possible leftover articles that did not fit into any layer. The amount is different because each strategy is more or less efficient.

To try out all combinations of possible layer arrangements, I have to walk through a tree where at each node I branch four times for each individual strategy. Individual neighboring nodes might be the same but this outcome is unlikely due to the path leading to those neighboring nodes being different.

To simplify, lets name the four possible strategies for each layers 0, 1, 2 and

3. I now want an algorithm that enumerates through all possible permutations of

those four numbers for "some" length. This is similar to counting. And the

itertools module comes with the product() method that nearly does what I

want. For example, should I know that my tree does not become deeper than 8

(read: no more than eight layers will be built), then I can just run:

>>> for i in itertools.product([0,1,2,3], repeat=8):

... print i

...

(0,0,0,0,0,0,0,0)

(0,0,0,0,0,0,0,1)

(0,0,0,0,0,0,0,2)

(0,0,0,0,0,0,0,3)

(0,0,0,0,0,0,1,0)

(0,0,0,0,0,0,1,1)

(0,0,0,0,0,0,1,2)

(0,0,0,0,0,0,1,3)

This would work if the number of layers created with each strategy was the same. But as each strategy behaves differently depending on the input, it cannot be known before actually trying out a sequence of strategies, how many layers it will yield.

The strategy (0,0,0,0,0,0,0,0) might create 7 layers, resulting in (0,0,0,0,0,0,0,1), (0,0,0,0,0,0,0,2) and (0,0,0,0,0,0,0,3) yielding the same output as only the first 7 strategies count. This would create duplicates which I should not waste cpu cycles on later.

It might also be that (0,0,0,0,0,0,1,0) turns out to be a combination of strategies that creates more than 8 layers in which case the whole thing fails.

So what I need is a generator, that gives me a new strategy depending on how often it is asked for one. It should dynamically extend the tree of possible permutations to accommodate for any size.

Since the tree will become humongous (4^11 = 4194304), already traversed nodes should automatically be cleaned so that only the nodes that make the current list of strategies stays in memory at any point in time.

This sounded all complicated which made me even more surprised by how simple the solution was in the end.

Here a version of the algorithm that could easily be ported to C:

class Tree:

def __init__(self, branch_factor):

self.branch_factor = branch_factor

self.root = {"value": None, "parent": None, "children": []}

self.current = self.root

def next(self):

if not self.current["children"]:

self.current["children"] = [{"value":val, "parent":self.current, "children":[]} for val in range(self.branch_factor)]

self.current = self.current["children"][0]

return self.current["value"]

def reset(self):

if self.current["parent"]:

self.current["parent"]["children"].pop(0)

else:

return False

if self.current["parent"]["children"]:

self.current = self.root

return True

else:

self.current = self.current["parent"]

return self.reset()

def __str__(self):

return str(self.root)

It would be used like this:

>>> tree = Tree(4)

>>> print tree.next(), tree.next(), tree.next()

>>> while tree.reset():

... print tree.next(), tree.next(), tree.next()

Which would be equivalent to calling itertools.product([1,2,3,4], 3). The

special part is, that in each iteration of the loop I can call tree.next() an

arbitrary amount of times, just how much it is needed. Whenever I cannot

generate an additional layer anymore, I can call tree.reset() to start a new

permutation.

For my code I used a python specific version which is a generator:

def product_varlength(branch_factor):

root = {"value": None, "parent": None, "children": []}

current = root

while True:

if not current["children"]:

current["children"] = [{"value":val, "parent":current, "children":[]} for val in range(branch_factor)]

current = current["children"][0]

if (yield current["value"]):

while True:

if current["parent"]:

current["parent"]["children"].pop(0)

else:

return

if current["parent"]["children"]:

current = root

break

else:

current = current["parent"]

It is used like this:

it = product_varlength(4)

print it.next(), it.send(False), it.send(False)

while True:

print it.send(True), it.send(False), it.send(False)

Again, the expression in the loop can have any number of it.send(False). The

first it.send(True) tells the generator to do a reset.

network file transfer to marvell kirkwood

Sat, 19 May 2012 01:06 categories: codeI have a Seagate GoFlex Net with two 2TB harddrives attached to it via SATA. The device itself is connected to my PC via its Gigabit Ethernet connection. It houses a Marvell Kirkwood at 1.2GHz and 128MB. I am booting Debian from a USB stick connected to its USB 2.0 port.

The specs are pretty neat so I planned it as my NAS with 4TB of storage being attached to it. The most common use case is the transfer of big files (1-10 GB) between my laptop and the device.

Now what are the common ways to achieve this?

scp:

scp /local/path user@goflex:/remote/path

rsync:

rsync -Ph /local/path user@goflex:/remote/path

sshfs:

sshfs -o user@goflex:/remote/path /mnt

cp /local/path /mnt

ssh:

ssh user@goflex "cat > /remote/path" < /local/path

I then did some benchmarks to see how they perform:

scp: 5.90 MB/s

rsync: 5.16 MB/s

sshfs: 5.05 MB/s

ssh: 5.42 MB/s

Since they all use ssh for transmission, the similarity of the result does not come as a surprise and 5.90 MB/s are also not too shabby for a plain scp. It means that I can transfer 1 GB in a bit under three minutes. I could live with that. Even for 10 GB files I would only have to wait for half an hour which is mostly okay since it is mostly known well in advance that a file is needed.

As adam points out in the comments, I can also use try using another cipher than AES for ssh. So I tried to use arcfour and blowfish-cbc.

scp+arcfour: 12.4MB/s

scp+blowfish: 9.0MB/s

But lets see if we can somehow get faster than this. Lets analyze where the bottleneck is.

Lets have a look at the effective TCP transfer rate with netcat:

ssh user@goflex "netcat -l -p 8000 > /dev/null"

dd if=/dev/zero bs=10M count=1000 | netcat goflex 8000

79.3 MB/s wow! Can we get more? Lets try increasing the buffer size on both ends. This can be done using nc6 with the -x argument on both sides.

ssh user@goflex "netcat -x -l -p 8000 > /dev/null"

dd if=/dev/zero bs=10M count=1000 | netcat -x gloflex 8000

103 MB/s okay this is definitely NOT the bottleneck here.

Lets see how fast I can read from my harddrive:

hdparm -tT /dev/sda

114.86 MB/s.. hmm... and writing to it?

ssh user@goflex "time sh -c 'dd if=/dev/zero of=/remote/path bs=10M count=100; sync'"

42.93 MB/s

Those values are far faster than my puny 5.90 MB/s I get with scp. A look at the CPU usage during transfer shows, that the ssh process is at 100% CPU usage the whole time. It seems the bottleneck was found to be ssh and the encryption/decryption involved.

I'm transferring directly from my laptop to the device. Not even a switch is in the middle so encryption seems to be quite pointless here. Even authentication doesnt seem to be necessary in this setup. So how to make the transfer unencrypted?

The ssh protocol specifies a null cipher for not-encrypted connections. OpenSSH doesnt support this. Supposedly, adding

{ "none", SSH_CIPHER_NONE, 8, 0, 0, EVP_enc_null }

to cipher.c adds a null cipher but I didnt want to patch around in my installation.

So lets see how a plain netcat performs.

ssh user@goflex "netcat -l -p 8000 > /remote/path"

netcat goflex 8000 < /local/path

32.9 MB/s This is far better! Lets try a bigger buffer:

ssh user@goflex "netcat -x -l -p 8000 > /remote/path"

netcat -x goflex 8000 < /local/path

37.8 MB/s now this is far better! My Gigabyte will now take under half a minute and my 10 GB file under five minutes.

But it is tedious to copy multiple files or even a whole directory structure with netcat. There are far better tools for this.

An obvious candidate that doesnt encrypt is rsync when being used with the rsync protocol.

rsync -Ph /local/path user@goflex::module/remote/path

30.96 MB/s which is already much better!

I used the following line to have the rsync daemon being started by inetd:

rsync stream tcp nowait root /usr/bin/rsync rsyncd --daemon

But it is slower than pure netcat.

If we want directory trees, then how about netcatting a tarball?

ssh user@goflex "netcat -x -l -p 8000 | tar -C /remote/path -x"

tar -c /local/path | netcat goflex 8000

26.2 MB/s so tar seems to add quite the overhead.

How about ftp then? For this test I installed vsftpd and achieved a speed of 30.13 MB/s. This compares well with rsync.

I also tried out nfs. Not surprisingly, its transfer rate is up in par with rsync and ftp at 31.5 MB/s.

So what did I learn? Lets make a table:

| method | speed in MB/s |

|---|---|

| scp | 5.90 |

| rsync+ssh | 5.16 |

| sshfs | 5.05 |

| ssh | 5.42 |

| scp+arcfour | 12.4 |

| scp+blowfish | 9.0 |

| netcat | 32.9 |

| netcat -x | 37.8 |

| netcat -x | tar | 26.2 |

| rsync | 30.96 |

| ftp | 30.13 |

| nfs | 31.5 |

For transfer of a directory structure or many small files, unencrypted rsync seems the way to go. It outperforms a copy over ssh more than five-fold.

When the convenience of having the remote data mounted locally is needed, nfs outperforms sshfs at speeds similar to rsync and ftp.

As rsync and nfs already provide good performance, I didnt look into a more convenient solution using ftp.

My policy will now be to use rsync for partial file transfers and mount my remote files with nfs.

For transfer of one huge file, netcat is faster. Especially with increased buffer sizes it is a quarter faster than without.

But copying a file with netcat is tedious and hence I wrote a script that simplifies the whole remote-login, listen, send process to one command. First argument is the local file, second argument is the remote name and path just as in scp.

#!/bin/sh -e

HOST=${2%%:*}

USER=${HOST%%@*}

if [ "$HOST" = "$2" -o "$USER" = "$HOST" ]; then

echo "second argument is not of form user@host:path" >&2

exit 1

fi

HOST=${HOST#*@}

LPATH=$1

LNAME=`basename "$1"`

RPATH=`printf %q ${2#*:}/$LNAME`

ssh "$USER@$HOST" "nc6 -x -l -p 8000 > $RPATH" &

sleep 1.5

pv "$LPATH" | nc6 -x "$HOST" 8000

wait $!

ssh "$USER@$HOST" "md5sum $RPATH" &

md5sum "$LPATH"

wait $!

I use pv to get a status of the transfer on my local machine and ssh to login

to the remote machine and start netcat in listening mode. After the transfer I

check the md5sum to be sure that everything went fine. This step can of course

be left out but during testing it was useful. Escaping of the arguments is done

with printf %q.

Problems with the above are the sleep, which can not be avoided but must be there to give the remote some time to start netcat and listen. This is unclean. A next problem with the above is, that one has to specify a username. Another is, that in scp, one has to double-escape the argument while above this is not necessary. The host that it netcats to is the same as the host it ssh's to. This is not necessarily the case as one can specify an alias in ~/.ssh/config. Last but not least this only transfers from the local machine to the remote host. Doing it the other way round is of course possible in the same manner but then one must be able to tell how the local machine is reachable for the remote host.

Due to all those inconveniences I decided not to expand on the above script.

Plus, rsync and nfs seem to perform well enough for day to day use.

a periodic counter

Fri, 18 May 2012 20:36 categories: codetldr: counting without cumulative timing errors

Sometimes I want just a small counter, incrementing an integer each second running somewhere in a terminal. Maybe it is because my wristwatch is in the bathroom or because I want to do more rewarding things than counting seconds manually. Maybe I want not only to know how long something takes but also for how long it already ran in the middle of its execution? There are many reason why I would want some script that does nothing else than simply counting upward or downward with some specific frequency.

Some bonuses:

- the period should be possible to give as a floating point number and especially periods of a fraction of a second would be nice

- it should be able to execute an arbitrary program after each period

- it should not matter how long the execution of this program takes for the overall counting

Now this can not be hard, right? One would probably write this line and be done with it:

while sleep 1; do echo $i; i=$((i+1)); done

or to count for a certain number of steps:

for i in `seq 1 100`; do echo $i; sleep 1; done

This would roughly do the job but in each iteration some small offset would be added and though small, this offset would quickly accumulate.

Sure that cumulative error is tiny but given that this task seems to be so damn trivial I couldn't bear anymore with running any of the above but started looking into a solution.

Sure I could just quickly hack a small C script that would check gettimeofday(2) at each iteration and would adjust the time to usleep(3) accordinly but there HAD to be people before me with the same problem who already came up with a solution.

And there was! The solution is the sleepenh(1) program which, when given the timestamp of its last invocation and the sleep time in floating point seconds, will sleep for just the right amount to keep the overall frequency stable.

The author suggests, that sleepenh is to be used in shell scripts that need to repeat an action in a regular time interval and that is just what I did.

The result is trivial and simple but does just what I want:

- the interval will stay the same on average and the counter will not "fall behind"

- count upward or downward

- specify interval length as a floating point number of seconds including fractions of one second

- begin to count at given integer and count for a specific number of times or until infinity

- execute a program at every step, optionally by forking it from the script for programs possibly running longer than the given interval

You can check it out and read how to use and what to do with it on github:

https://github.com/josch/periodic

Now lets compare the periodic script with the second example from above:

$ time sh -c 'for i in `seq 1 1000`; do echo $i; sleep 1; done'

0.08s user 0.12s system 0% cpu 16:41.55 total

So after only 1000 iterations, the counter is already off by 1.55 seconds. This means that instead of having run with a frequency of 1.0 Hz, the actual frequency was 1.00155 Hz. Is it too much to not want this 0.155% of error?

$ time ./periodic -c 1000

0.32s user 0.00s system 0% cpu 16:40.00 total

1000 iterations took exactly 1000 seconds. Cool.